This lecture introduces the concept of probability and basic probability laws. Applications are deferred to next lecture.

There are two types of lecturers: one that follows strictly a plan, being it in the form of Powerpoint slides, lecture notes, or a textbook, and the other jumping around, even though they had a plan. Apparently, I belonged to the second kind. The only plan that I followed was the following, informal definition of probability:

Probability is a measure of how likely it is that something will happen, or that a statement is true.

This teaching approach requires students to do their own readings. To me, learning involves two processes: private learning and absorbing; and discussions and debates. The purpose of discussions is to help integrate the new knowledge into our own existing knowledge network – a process of Bayesian updating.

Back to the informal definition of probability given above, I forgot where I took this definition – likely from Wikipedia – but this definition fits my purpose of emphasizing the double faces of probability: that is, a probability model can be applied to both a natural system (e.g., a building, a transport network), which is objective, and a decision system (e.g., a design process, an asset management planning process).

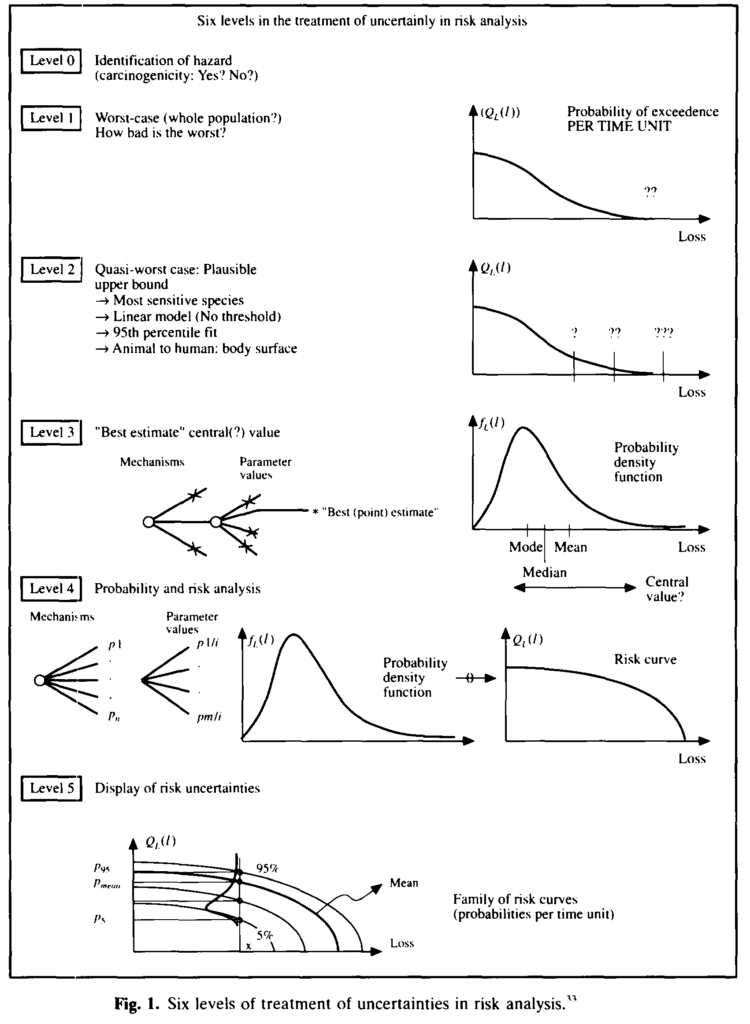

I provided students with a lecture note. The note includes a section “What is probability?” to explain various interpretations of probability. Although there are at least six different interpretations, my notes focus on only the classical, frequentistic, and Bayesian interpretations. I decided not to discuss this during my lecture. They are interesting and hard topics. A thorough discussion of these interpretations will require more than 3 hours, which I cannot afford. On the other hand, not every student is philosophically oriented. From applications point of view, most of students probably just want to know which stands you are taking and why. This is probably a safe approach.

My selection is a Bayesian one. From a pragmatic view, a coin to be tossed and a coin that’s covered on the desk are, to me (a modeller), is no difference. They both can be modelled by a probability model. Unless there is a way (or we can afford to take the way) to collect all necessary data for the coin dynamics from the start of tossing to the landing, these two coins can take the same probability model. From a theoretic view, separating probability into frequentistic probability and subjective (or Bayesian) probability is a fact of history of knowledge discovery, but we do not need to fall into the trap of history. All knowledge is relative; using the jargon of probability, conditional! All theories has a boundary. Therefore, a full discourse of any serious matter must take the background knowledge (or assumptions) into consideration. This is the essence of risk analysis, and this is the major reason that we do not use ‘risk-based’ decision making, but rather ‘risk-informed’ decision making. Separating probability into subjective and objective probabilities will create ourselves a new trap: what is the sum (or product) of an apple and an orange?

Suppose the coin to be tossed is modelled by an ‘objective’ probability, and the coin being covered on the desk by a ‘subjective’ probability. If either of the coin is on heads, take 1, otherwise 0. Ask: what is the probability that the sum of the two coins will be 1? There are three possibilities: 0 (TT), 1 (HT & TH), and 2 (HH). The answer is 50%. Is it similar to like adding an apple to an orange?

Why do we need to formalize the definition of probability?

Andre Kolmogorov axiomatized the probability theory. As an engineering student, do we really need to care the theory?

I provided in the lecture two motivational examples. One is taken Khaneman’s Thinking – Fast and Slow:

Linda is thirty-one years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice, and also participated in antinuclear demonstrations.

Does Linda look more like

- A. A bank teller,

- B. An insurance salesperson, or

- C. A bank teller active in the feminist movement?

This example shows the significance of following probability laws if daily reasoning.

The second motivational example is the famous Bertrand’s paradox:

A random chord is drawn on the unit circle. What is the probability that its length exceeds √3, the length of the side of the equilateral triangle inscribed in the unit circle?

What do we mean by randomness? Three different definitions of ‘a random chord’ give three different estimation of the probability: 1/2, 1/3, and 1/4. Amazing!

Every Probability is Conditional!

Conditional probability of A given B is defined as Pr(A|B) = Pr(AB)/Pr(B). The event B becomes a normalizer. Based on this, we can take Pr(A) as Pr(A|O), where O here represents the sample space $\Omega$ (thanks TMU’s wordpress, it doesn’t support Latex writing!).

Two events A and B are said to be independent of each other if Pr(A|B) = Pr(A), or Pr(B|A) = Pr(B), or Pr(AB) = Pr(A)xPr(B). In daily language, the independence basically means that knowing a new information B does not change your estimation (or belief) of the probability of A.

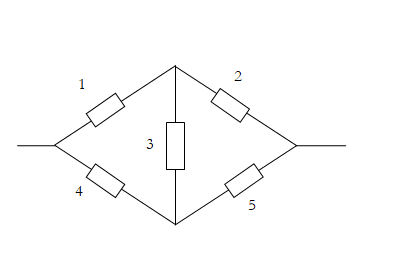

A common confusion that beginners often have is between the notion of mutual exclusion and statistical dependence. Events A and B are mutually exclusive if Pr(AB) = 0. In the Venn’s diagram, A and B have no overlapped area. So mutual exclusion can be easily visualized. Independence is very difficult to visualize, although an area analogy to probability may be helpful – that is, think of Pr(A) being the proportion of the whole area of the sample space, Area(A)/Area(O); then Pr(A|B) becomes Area(AB)/Area(B). If the two proportion of A to Omega happens to the same as the proportion of AB to B, then A and B are independent.

Conditional Independence and Bayesian Network

Two events A and B are said to be conditional independent of each other given C, if Pr(AB|C) = Pr(A|C) Pr(B|C).

The conditional independence is a powerful assumption for dealing with complex joint events. A special case is the Markov property:

Pr(X1 X2 … Xn) = Pr(X1) Pr(X2|X1) Pr(X3|X2) … Pr(Xn|Xn-1)

A modern application of the conditional independence is through the notion of Bayesian network, which will be explained in the next or further next lecture.

Total Probability Formula

The total probability formula is a powerful tool in probabilistic risk analysis. The formula looks naively simple:

Pr(A) = Pr(AE1) + … + Pr(AEn) = Pr(A|E1) Pr(E1) + … + Pr(A|En) Pr(En)

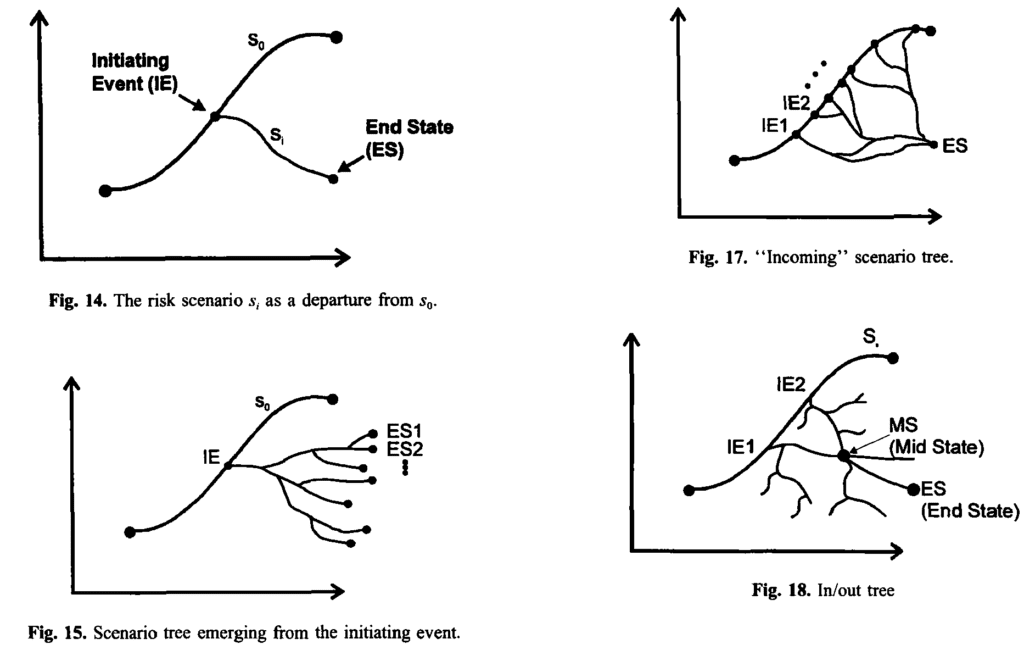

In real-world problems, there may be multiple, mutually exclusive, ways to trigger an event A. These exhaustive, mutually exclusive events are denoted as E1, …, En (sometimes referred to as initiating events). Then the probability of A can be evaluated separately using the old ‘divide-and-conquer’ wisdom.

Bayes Rule

Many people underestimate the value of the Bayes formula. This time, I introduced it by introducing a toy problem first:

A city is served by three overnight mail carriers called A, B, and C. The past record indicates that they fail to deliver the mail on time 1%, 2%, and 3% of the time, respectively. If the overnight letter arrived late, what is the probability that it was sent via A? via B? via C?

The background information was intentionally missing. This aligns with the historical development of the so-called inverse probability problem. Knowing Pr(A|B), how do we find Pr(B|A)?

The value of the Bayes formula is not the mathematical formula itself, but lies on the interpretations of Pr(A) and Pr(A|E), with E representing the new ‘evidence’ and A the event of major interest.

With new evidence E arriving, how do we update our belief of A? There are two layers of issues here. The first layer is that A and E must be dependent somehow. If they are independent of each other, the new evidence does not provide any new information about A, and hence there is no updating.

The second layer of significance is the ‘outside of box’, or systems viewpoint. To complete the updating, we need to look at not only how A would affect the occurrence of E, but also other ‘reasons’ that may trigger the occurrence of E as well. Only through this holistic assessment, can one properly update their belief on A.

Dr. Abusamra (

Dr. Abusamra (